
If you want to exclude multiple crawlers, such as Googlebot and Bingbot, it’s fine to use multiple robot exclusion tags. While crawling the URLs on your site, a crawler may encounter errors.

The Evolution Of SEO


It’s essential to make sure that search engines can discover all the content you want indexed, and not just your homepage. Googlebot starts out by fetching a few web pages, and then follows the links on those pages to find new URLs. Crawling is the discovery process in which search engines send out a team of robots (known as crawlers or spiders) to find new and updated content.

But why do we give this area of SEO so much importance? We will shed some light on crawling and its role as a factor in Google rankings. Pages known to the search engine are crawled periodically to determine whether any changes have been made to the page’s content since the last time it was crawled.


Crawlers

It also stores all of the external and internal links to the website. The crawler will visit the stored links at a later point in time, which is how it moves from one website to the next.

Next, the crawlers (sometimes called spiders) follow your links to the other pages of your website and gather more data. A crawler is a program used by search engines to collect data from the internet. When a crawler visits a website, it picks over the entire website’s content (i.e. the text) and stores it in a database.
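To make the idea of a crawler storing a page’s text along with its internal and external links more concrete, here is a minimal sketch using only Python’s standard library. The names `LinkCollector` and `extract` are made up for illustration, and a real search engine crawler is of course far more involved than this.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collects the text and the links of a single HTML page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.text_parts = []
        self.internal_links = []
        self.external_links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if not href:
            return
        # Resolve relative links against the page URL.
        absolute = urljoin(self.base_url, href)
        same_host = urlparse(absolute).netloc == urlparse(self.base_url).netloc
        target = self.internal_links if same_host else self.external_links
        target.append(absolute)

    def handle_data(self, data):
        if data.strip():
            self.text_parts.append(data.strip())


def extract(base_url, html):
    """Return the page text plus its internal and external links."""
    collector = LinkCollector(base_url)
    collector.feed(html)
    collector.close()
    return {
        "text": " ".join(collector.text_parts),
        "internal": collector.internal_links,
        "external": collector.external_links,
    }
```

Calling `extract("https://example.com/", html)` on a fetched page gives you the text to store and two lists of links to visit later.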

You can go to Google Search Console’s “Crawl Errors” report to detect URLs on which this might be happening; this report will show you server errors and not found errors. Ensure that you’ve only included URLs that you want indexed by search engines, and be sure to give crawlers consistent directions. Sometimes a search engine will be able to find parts of your site by crawling, but other pages or sections might be obscured for one reason or another.


Keyworddit

Creating long, quality content is useful for both users and search engines. I have also applied these methods and they work great for me. In addition to the above, you can use structured data to describe your content to search engines in a way they can understand. Your overall goal with content SEO is to write SEO-friendly content that search engines can understand, while at the same time satisfying user intent and keeping readers happy. Search engine optimization, or SEO, is the process of optimizing your website to achieve the greatest possible visibility in search engines.

Therefore we do want to have a page that search engines can crawl, index and rank for this keyword. So we’d ensure that this is possible through our faceted navigation by making the links clear and easy to find. Upload your log files to Screaming Frog’s Log File Analyser to verify search engine bots, check which URLs have been crawled, and examine search bot data.

Recovering From Data Overload In Technical SEO

Or, if you elect to use “nofollow,” the search engines will not follow or pass any link equity through to the links on the page. By default, all pages are assumed to have the “follow” attribute. How does Google know which version of the URL to serve to searchers?

If a search engine detects changes to a page after crawling it, it will update its index in response to those detected changes. Now that you’ve got a high-level understanding of how search engines work, let’s delve deeper into the processes that search engines and web crawlers use to understand the web. Of course, this means that the page’s ranking potential is lessened (since the search engine can’t actually analyze the content on the page, the ranking signals are all off-page signals plus domain authority).

After a crawler finds a page, the search engine renders it just like a browser would. In the process of doing so, the search engine analyzes that page’s contents. At this point, Google decides which keywords your page will rank for, and at what position in each keyword search. This is decided by a variety of factors that ultimately make up the entire business of SEO. Also, any links on the indexed page are now scheduled for crawling by Googlebot.

Crawling means that a search engine visits a link; indexing means putting the page contents into a database (after analysis) and making them available in search results when a query is made. Put another way, crawling is when the search engine robot fetches web pages, while indexing is when it stores the information so that it can appear in search results. Crawling is the first stage of work for any search engine like Google. After crawling, the search engine processes the data it collected; this process is called indexing. Never confuse crawling and indexing, because they are different things.
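The difference between crawling and indexing can be illustrated with a toy inverted index. This is only a sketch under simple assumptions (whole-word matching, no ranking, made-up example URLs and function names), not a description of how Google actually stores pages.

```python
import re
from collections import defaultdict


def build_index(pages):
    """Map each lowercase word to the set of URLs whose text contains it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in re.findall(r"[a-z0-9]+", text.lower()):
            index[word].add(url)
    return index


def search(index, query):
    """Return the URLs that contain every word of the query (no ranking)."""
    words = re.findall(r"[a-z0-9]+", query.lower())
    if not words:
        return set()
    results = set(index.get(words[0], set()))
    for word in words[1:]:
        results &= index.get(word, set())
    return results


# Text that a crawler has already fetched (hypothetical pages).
crawled_pages = {
    "https://example.com/jackets": "Waterproof jackets for men",
    "https://example.com/boots": "Waterproof hiking boots",
}
index = build_index(crawled_pages)
```

Here `crawled_pages` stands for the output of crawling, `build_index` is the indexing step, and `search(index, "waterproof jackets")` is what happens when a request is made.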

A Technical SEO Guide To Crawling, Indexing And Ranking

After your page is indexed, Google then works out how your page should be found in its search results. Getting crawled means that Google is looking at the page. Depending on whether or not Google thinks the content is “new” or otherwise has something to “give to the Internet,” it may schedule the page to be indexed, which means it has the possibility of ranking. As you can see, crawling, indexing, and ranking are all core elements of search engine optimisation.

And that’s why all three of these facets must be allowed to work as smoothly as possible. The web addresses above are added to a ginormous index of URLs (a bit like a galaxy-sized library). Pages are fetched from this database when a person searches for information for which that particular page is an accurate match. The page is then displayed on the SERP (search engine results page) along with nine other potentially relevant URLs. After this point, the Google crawler will begin crawling the site, accessing all of the pages through the various internal links that we have created.

It is always a good idea to run a quick, free SEO report on your website as well. The best automated SEO audits will provide data on your robots.txt file, an important file that lets search engines and crawlers know whether they can crawl your website. It’s not only those links that get crawled; it is said that Googlebot will search up to five sites back. That means if a page is linked to a page, which linked to a page, which linked to a page which linked to your page (which just got indexed), then all of them will be crawled.

If you’ve ever seen a search result where the description says something like “This page’s description is not available because of robots.txt”, that’s why. But SEO for content has enough specific variables that we’ve given it its own section. Start here if you’re curious about keyword research, how to write SEO-friendly copy, and the kind of markup that helps search engines understand just what your content is really about.

Content can vary (it could be a webpage, an image, a video, a PDF, and so on), but regardless of the format, content is discovered by links. A search engine like Google consists of a crawler, an index, and an algorithm.


Through this process the crawler captures and indexes every website that has links to at least one other website. Advanced, mobile app-like websites are very nice and convenient for users, but the same cannot be said for search engines. Crawling and indexing websites where content is served with JavaScript have become quite complex processes for search engines.

To make sure that your page gets crawled, you should have an XML sitemap uploaded to Google Search Console (formerly Google Webmaster Tools) to give Google a roadmap of all your new content. If the robots meta tag on a particular page blocks the search engine from indexing that page, Google will crawl that page, but won’t add it to its index.

Sitemaps contain sets of URLs, and can be created by a website to provide search engines with a list of pages to be crawled. These can help search engines find content hidden deep within a website and can give webmasters the ability to better control and understand the areas of site indexing and frequency. Once you’ve ensured your site has been crawled, the next order of business is to make sure it can be indexed. That’s right: just because your site can be discovered and crawled by a search engine doesn’t necessarily mean that it will be stored in its index. In the previous section on crawling, we discussed how search engines discover your web pages.
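As for the XML sitemap mentioned above, a bare-bones one can be generated with Python’s standard library. This is a minimal sketch: it only writes the `loc` element for each URL, the function name `build_sitemap` and the example URLs are made up, and real sitemaps often carry extra optional fields.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return sitemap XML (as a string) listing the given URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    return ET.tostring(urlset, encoding="unicode")


sitemap_xml = build_sitemap([
    "https://example.com/",
    "https://example.com/jackets/waterproof",
])
```

The resulting string can be saved as `sitemap.xml` and submitted through Google Search Console.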

We’re sure that Google follows the development of UI technologies more closely than we do. Therefore, Google will be able to work with JavaScript more efficiently over time, increasing the speed of crawling and indexing. But until then, if we want to use the benefits of modern UI libraries and at the same time avoid any disadvantages in terms of SEO, we have to follow the developments closely. When content is delivered in the initial HTML, Google does not have to download and render JavaScript files or make any extra effort to browse your content. All your content already comes in an indexable form in the HTML response.

This could take a few hours, or even days, depending on how much Google values your website. It indexes a version of your content crawled with JavaScript. We should add that this process may take weeks if your website is new. JavaScript SEO is basically all of the work done so that search engines can smoothly crawl, index and rank websites where most of the content is served with JavaScript.

You really should know which URLs Google is crawling on your site. The only ‘real’ way of knowing that is looking at your site’s server logs. For larger sites, I personally prefer using Logstash + Kibana. For smaller sites, the guys at Screaming Frog have released quite a nice little tool, aptly called SEO Log File Analyser (note the S, they’re Brits). Crawling (or spidering) is when Google or another search engine sends a bot to a web page or web post to “read” the page.

Don’t confuse this with having that page indexed. Crawling is the first part of having a search engine recognize your page and show it in search results. Having your page crawled, however, does not necessarily mean your page was indexed and will be found.

If you’re constantly adding new pages to your website, a steady and gradual increase in the pages indexed probably means that they are being crawled and indexed correctly. On the other hand, if you see a big drop (which wasn’t expected) then it may indicate problems and that the search engines are not able to access your website properly. Once you’re happy that the search engines are crawling your site correctly, it’s time to monitor how your pages are actually being indexed and actively watch for problems. As a search engine’s crawler moves through your site it will also detect and record any links it finds on these pages and add them to a list that will be crawled later. Crawling is the process by which search engines discover updated content on the web, such as new sites or pages, changes to existing sites, and dead links.
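One rough way to check whether a piece of content is already present in the HTML response, rather than only appearing after JavaScript runs, is to look for it in the raw HTML while ignoring script bodies. The sketch below assumes that simple test is good enough for a first look; `VisibleText` and `in_initial_html` are names made up for this example.

```python
from html.parser import HTMLParser


class VisibleText(HTMLParser):
    """Gathers text from HTML while skipping <script> and <style> bodies."""

    def __init__(self):
        super().__init__()
        self.skip_depth = 0
        self.parts = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self.skip_depth:
            self.skip_depth -= 1

    def handle_data(self, data):
        if not self.skip_depth:
            self.parts.append(data)


def in_initial_html(html, phrase):
    """True if the phrase appears in the HTML outside script/style blocks."""
    parser = VisibleText()
    parser.feed(html)
    parser.close()
    # Collapse whitespace so line breaks in the markup do not matter.
    text = " ".join(" ".join(parser.parts).split())
    return phrase.lower() in text.lower()
```

If `in_initial_html` returns `False` for your main heading or product copy, that content probably depends on JavaScript rendering.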

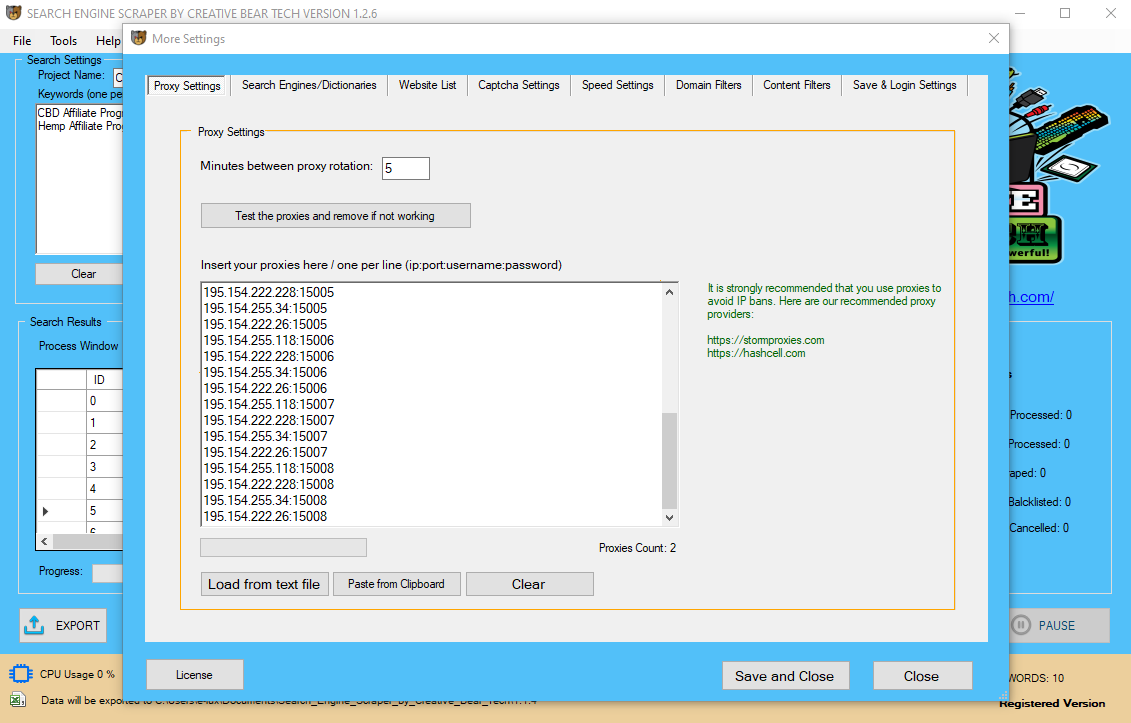

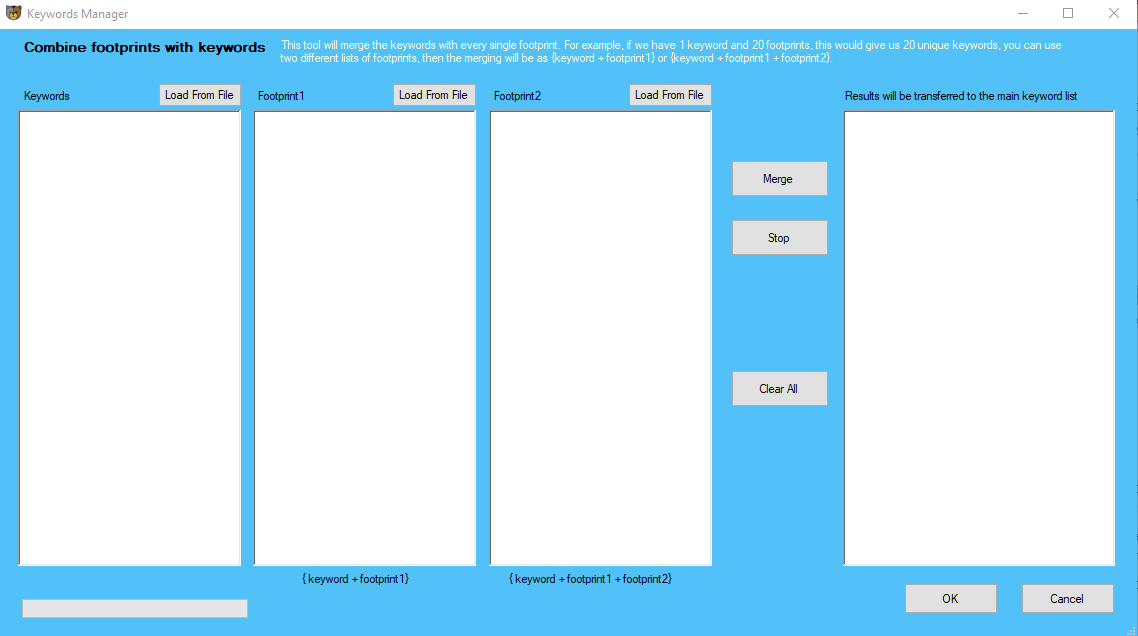

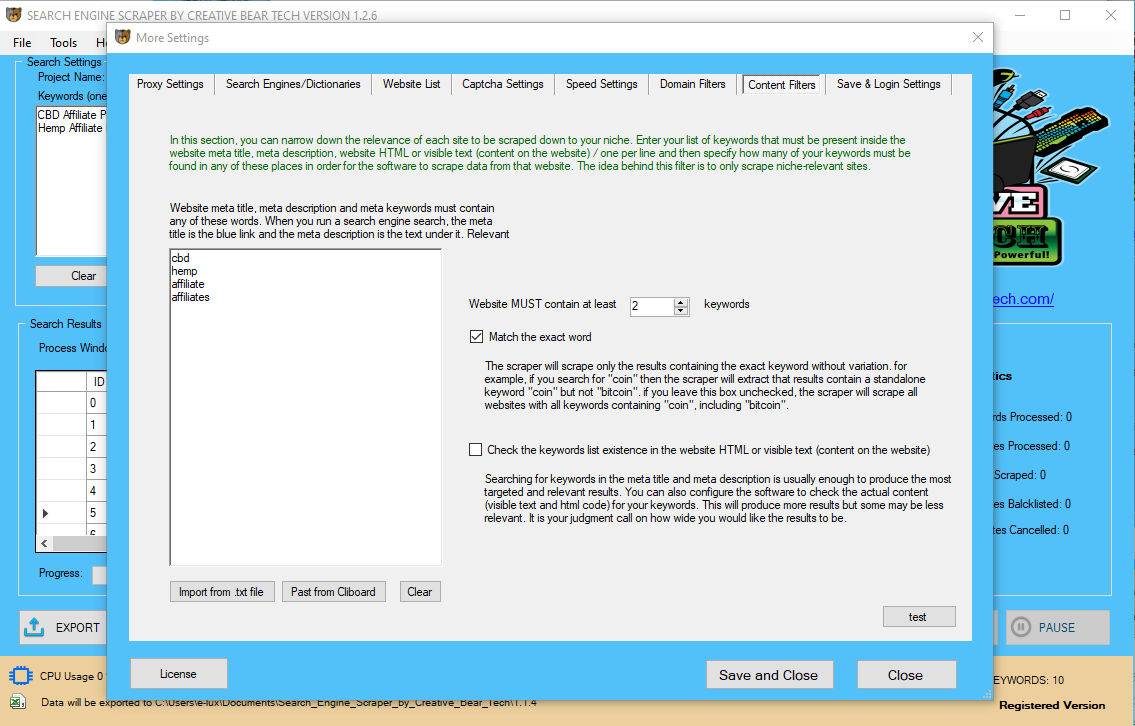

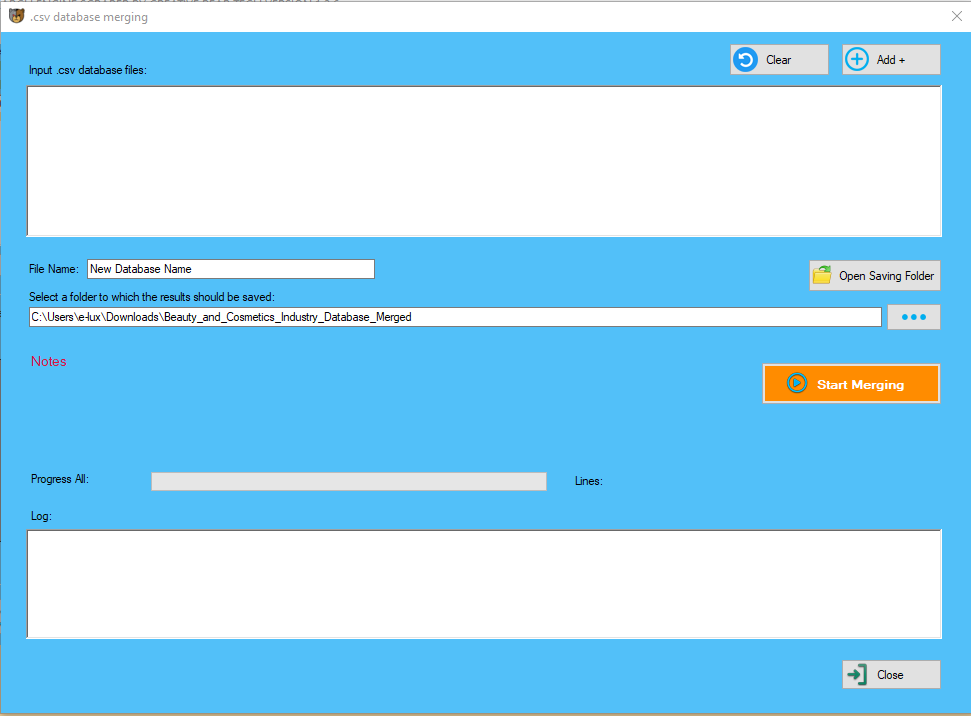

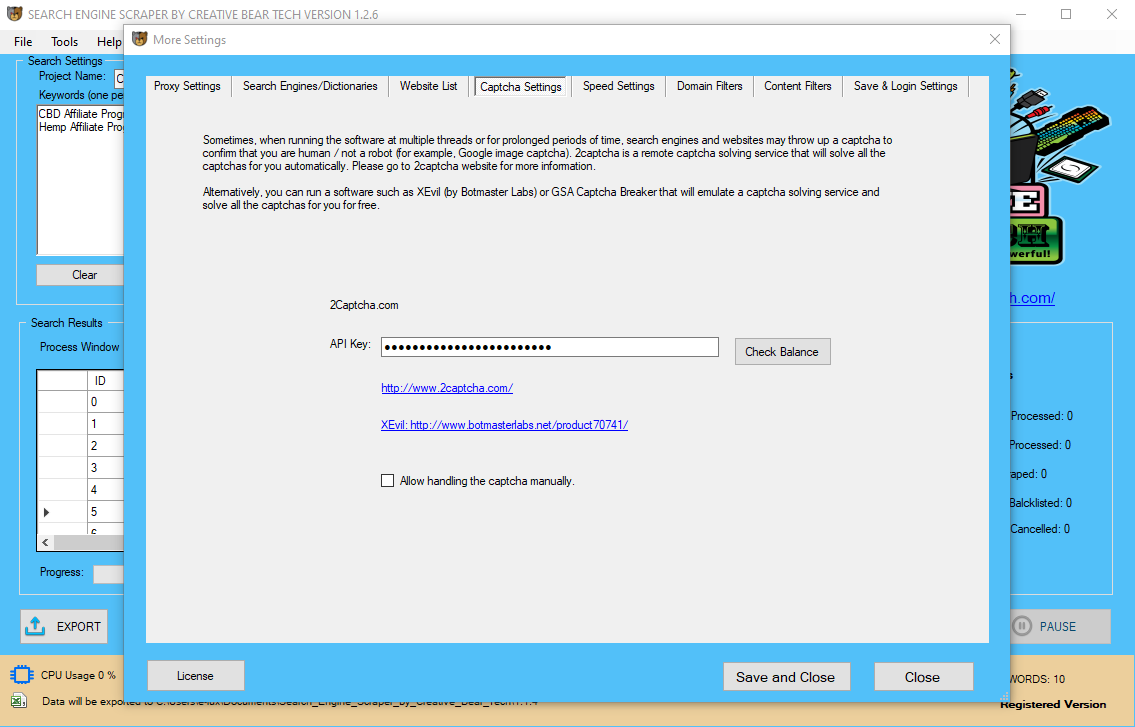


When Google’s crawler finds your website, it will read it and its content is stored in the index. Several events can make Google feel a URL has to be crawled. A crawler like Googlebot gets a list of URLs to crawl on a site.

Your server log files will record when pages have been crawled by the search engines (and other crawlers) as well as recording visits from people. You can then filter these log files to find exactly how Googlebot crawls your website, for instance. This can give you great insight into which pages are being crawled the most and, importantly, which ones don’t appear to be crawled at all. Now we know that a keyword such as “mens waterproof jackets” has a decent amount of search volume according to the AdWords keyword tool.

In this post you will learn what content SEO is and how to optimize your content for search engines and users using best practices. In short, content SEO is about creating and optimizing your content so that it can potentially rank high in search engines and attract search engine traffic. Having your page indexed by Google is the next step after it gets crawled. As stated, it doesn’t mean that every site that gets crawled gets indexed, but every site indexed had to be crawled. If Google deems your new page worthy, then Google will index it.

This is decided by a variety of factors that ultimately make up the entire business of SEO. Content SEO is an important part of the on-page SEO process. Your overall goal is to give both users and search engines the content they’re looking for. As stated by Google, know what your readers want and give it to them.

Very early on, search engines needed help figuring out which URLs were more trustworthy than others to help them determine how to rank search results. Counting the number of links pointing to any given site helped them do this. For example, a robots meta tag whose content is “noindex, nofollow” excludes all search engines from indexing the page and from following any on-page links.

Crawling is the process by which a search engine scours the web to find new and updated web content. These little bots arrive on a page, scan the page’s code and content, and then follow links present on that page to new URLs (aka web addresses). Crawling and indexing are part of the process of getting ‘into’ the Google index. This process begins with web crawlers: search engine robots that crawl all over your home page and collect data.
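The log filtering described above can be sketched in a few lines of Python. This assumes your server writes the common “combined” access log format and identifies Googlebot purely by its user-agent string, which can be spoofed, so treat the counts as a first approximation. The function names are made up for this example.

```python
import re
from collections import Counter

# Matches the request, status, size, referrer and user agent of a combined-format line.
LOG_PATTERN = re.compile(
    r'"(?:GET|HEAD|POST) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits(log_lines):
    """Count requests per path whose user agent mentions Googlebot."""
    hits = Counter()
    for line in log_lines:
        match = LOG_PATTERN.search(line)
        if match and "googlebot" in match.group("agent").lower():
            hits[match.group("path")] += 1
    return hits


def never_crawled(known_paths, hits):
    """Return the known paths that do not appear in the Googlebot hits."""
    return sorted(path for path in known_paths if path not in hits)
```

Feed `googlebot_hits` the lines of your access log, then pass your list of important URLs to `never_crawled` to see which ones Googlebot has not requested.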

It grabs your robots.txt file every once in a while to make sure it’s still allowed to crawl each URL and then crawls the URLs one by one. Once a spider has crawled a URL and parsed its contents, it adds the new URLs it has found on that page back onto the to-do list.
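The to-do list behaviour described above can be sketched as a simple queue. This is an illustration only: the `crawl` function, the in-memory `SITE` dictionary that stands in for real HTTP fetches, and the `is_allowed` callback that stands in for a robots.txt check are all assumptions made for the example.

```python
from collections import deque


def crawl(seed_urls, fetch_links, is_allowed, max_pages=100):
    """Visit URLs one by one, adding newly found links to the to-do list."""
    to_do = deque(seed_urls)
    seen = set(seed_urls)
    crawled = []
    while to_do and len(crawled) < max_pages:
        url = to_do.popleft()
        if not is_allowed(url):
            continue  # Blocked URLs are skipped, so their links stay unknown.
        crawled.append(url)
        for link in fetch_links(url):
            if link not in seen:
                seen.add(link)
                to_do.append(link)
    return crawled


# Hypothetical site: each path maps to the links found on that page.
SITE = {
    "/": ["/jackets", "/private"],
    "/jackets": ["/jackets/waterproof", "/"],
    "/jackets/waterproof": [],
    "/private": ["/secret"],
}

order = crawl(
    ["/"],
    fetch_links=lambda url: SITE.get(url, []),
    is_allowed=lambda url: not url.startswith("/private"),
)
```

Note that `/secret` is never reached here, because the only page linking to it is blocked.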

That’s what you want if those parameters create duplicate pages, but not ideal if you want those pages to be indexed. Crawl budget is most important on very large sites with tens of thousands of URLs, but it’s never a bad idea to block crawlers from accessing content you definitely don’t care about. Just make sure not to block a crawler’s access to pages you’ve added other directives on, such as canonical or noindex tags. If Googlebot is blocked from a page, it won’t be able to see the instructions on that page.

Crawling means that Googlebot looks at all the content/code on the page and analyzes it. Indexing means that the page is eligible to show up in Google’s search results. The process of checking website content or updated content, collecting the data, and sending it to the search engine is called crawling. This whole process is referred to as crawling and indexing in the search engine, SEO, and digital marketing world.

All commercial search engine crawlers begin crawling a website by downloading its robots.txt file, which contains rules about which pages search engines should or should not crawl on the website. The robots.txt file may also contain information about sitemaps, which list the URLs that the site wants a search engine crawler to crawl. Crawling and indexing are two distinct things, and this is commonly misunderstood in the SEO industry.

Follow/nofollow tells search engines whether links on the page should be followed or nofollowed. “Follow” results in bots following the links on your page and passing link equity through to those URLs.
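To spot pages where a robots.txt block would hide your on-page directives, you could compare the two, as in this rough sketch. It uses Python’s standard `urllib.robotparser`, whose matching is simpler than Googlebot’s own; the `hidden_directives` helper and the `page_directives` mapping (which you would have to build yourself) are assumptions for illustration.

```python
from urllib.robotparser import RobotFileParser


def hidden_directives(robots_txt, page_directives, user_agent="Googlebot"):
    """List URLs that carry directives but are blocked by robots.txt."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [
        url
        for url, directives in page_directives.items()
        if directives and not parser.can_fetch(user_agent, url)
    ]


robots_txt = "User-agent: *\nDisallow: /filter/\n"
page_directives = {
    "https://example.com/filter/red": ["noindex"],
    "https://example.com/filter/blue": [],
    "https://example.com/jackets": ["canonical"],
}
conflicts = hidden_directives(robots_txt, page_directives)
```

Any URL in `conflicts` has a canonical or noindex instruction that a blocked crawler will never get to read.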
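As a small illustration of robots.txt rules and sitemap references, Python’s standard `urllib.robotparser` can read such a file. The robots.txt content and URLs below are invented for the example, `site_maps()` needs Python 3.8 or newer, and real crawlers may interpret edge cases differently from this parser.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: one rule for everyone, a stricter one for bingbot.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/

User-agent: bingbot
Disallow: /

Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

googlebot_can_see_jackets = parser.can_fetch("Googlebot", "https://example.com/jackets")
googlebot_can_see_cart = parser.can_fetch("Googlebot", "https://example.com/checkout/cart")
bingbot_can_see_jackets = parser.can_fetch("bingbot", "https://example.com/jackets")
sitemaps = parser.site_maps()
```

With these rules, Googlebot falls under the `*` group and is only kept out of `/checkout/`, while bingbot is kept out of everything.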
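The index/follow logic of a robots meta tag can be expressed as a tiny helper. This sketch only covers the `noindex`, `nofollow` and `none` values and treats everything else as the default (index and follow); `parse_robots_meta` is a name made up for this example.

```python
def parse_robots_meta(content):
    """Turn a robots meta 'content' value into index/follow flags."""
    tokens = {token.strip().lower() for token in content.split(",")}
    blocked = "none" in tokens  # "none" is shorthand for noindex, nofollow
    return {
        "index": not (blocked or "noindex" in tokens),
        "follow": not (blocked or "nofollow" in tokens),
    }
```

For instance, `parse_robots_meta("noindex, nofollow")` reports that the page should be neither indexed nor have its links followed, while an empty value leaves both defaults in place.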


Small SEO Tools

So you don’t need technologies such as two-wave indexing or dynamic rendering for your content to gain recognition and be ranked in Google. Googlebot adds your website to the rendering queue for the second wave of indexing and accesses it to crawl its JavaScript resources.
